Project Overview

- Overview: ClimateWins, a fictional nonprofit organization, wants to make meaningful predictions about climate change and understand its future consequences. Using real data collected by the European Climate Assessment and Data Set project, this project aimed to use various supervised machine learning algorithms to predict the weather in mainland Europe.

- Motivation: Determine the utility of supervised machine learning to predict the occurrence of “pleasant weather days” in various cities across Europe.

- Agenda: Evenutally create a viable model to forecast the occurrence of extreme weather events in Europe and across the world, first through asking:

- Is machine learning applicable to weather data?

- What ethical concerns are specific to this project?

- What have been the historical maximum and minimum temperatures in the areas of concern?

- Can machine learning be used to predict if weather conditions will be favorable on a given day?

Data Details

- Data Source: European Climate Assessment and Data Set project

- Data collection period: 1960 to 2022

- Tools, skills and methodologies:

- Python utilized for gradient descent optimization and supervised machine learning algorithms.

- Libraries used included pandas, NumPy, matplotlib, seaborn, and sklearn.

- GitHub repository for scripts and other analytical details found here.

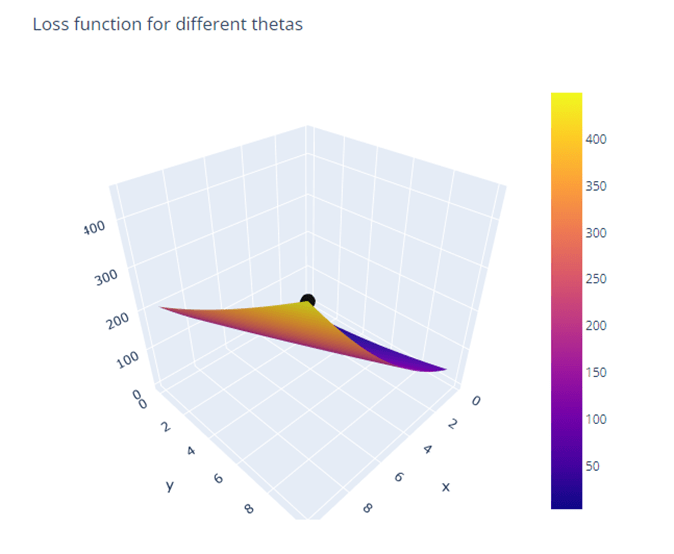

Gradient Descent Optimization

- Started with three different weather stations over three different years.

- Performed numerous trials to attain optimized parameters including theta 0 and theta 1; the number of iterations, and the step size.

- Plotted theta values and loss function across iterations to see whether the values converged and how.

Supervised Machine Learning

- K-Nearest Neighbors (KNN)

- Adjusted the number of neighbors, trying models with 3,4,6 and 10 neighbors.

- Attempted other techniques like undersampling and distance-based weighting.

- Assessed model performance through examination of mutliabel confusion matrices and various performance metrics, including overall accuracy, recall, precision, and F1-scores.

- Arrived at test accuracies that never went beyond 45%.

- Decision Trees

- Ran models without pruning and then using a pre-pruning technique.

- Attempted other techniques such as class weighting and Synthetic Minority Oversampling Technique (SMOTE).

- Assessed model performance through examination of mutliabel confusion matrices and various performance metrics, including overall accuracy, recall, precision, and F1-scores.

- Arrived at a test accuracy of 93% using the pre-pruning technique, however at the cost of class imbalance.

- Artificial Neural Networks (ANNs)

- Adjusted various hyperparameters including simple to complex layer architecture; the number of iterations, learning rate, tolerance, and alpha regularization.

- Assessed model performance through examination of mutliabel confusion matrices and various performance metrics, including overall accuracy, recall, precision, and F1-scores.

- Came to the best test accuracy of 64% after utilizing a simpler layer architecture (50,50); 500 iterations, and a tolerance of 0.0001.

- Once again, this model demonstrated significant issues with class imbalance, working better for the well-represented weather stations, and much less so for the underrepresented ones.

Conclusion and Takeaways

- Out of the three different model types tested, the decision tree ended with the best overall test accuracy, at 93%.

- However, as mentioned before, the model underperformed with stations that were less represented.

- Future steps to improve model performance would include utilizing and iterating upon various measures to ameliorate the inherent class imbalances, including applying class weights, random oversampling, and SMOTE, to name few.

- For more information, please find the linked presentation below.